Apple on Thursday said it’s introducing new child safety features in iOS, iPadOS, watchOS, and macOS as part of its efforts to limit the spread of Child Sexual Abuse Material (CSAM) in the U.S.

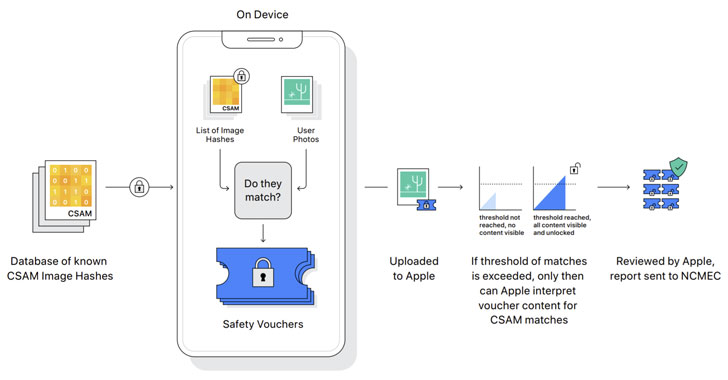

To that effect, the iPhone maker said it intends to begin client-side scanning of images shared via every Apple device for known child abuse content as they are being uploaded into iCloud Photos. It will also use on-device machine learning to vet all iMessage images sent or received by minor accounts (aged under 13) and warn parents of sexually explicit photos shared on the messaging platform.

Furthermore, Apple plans to update Siri and Search to intervene when users search for CSAM-related topics, alerting them that “interest in this topic is harmful and problematic.”

“Messages uses on-device machine learning to analyze image attachments and determine if a photo is sexually explicit,” Apple noted. “The feature is designed so that Apple does not get access to the messages.” The feature, called Communication Safety, is said to be an opt-in setting that must be enabled by parents through the Family Sharing feature.

How Child Sexual Abuse Material is…

http://feedproxy.google.com/~r/TheHackersNews/~3/9rcyWF6hqNc/apple-to-scan-every-device-for-child.html