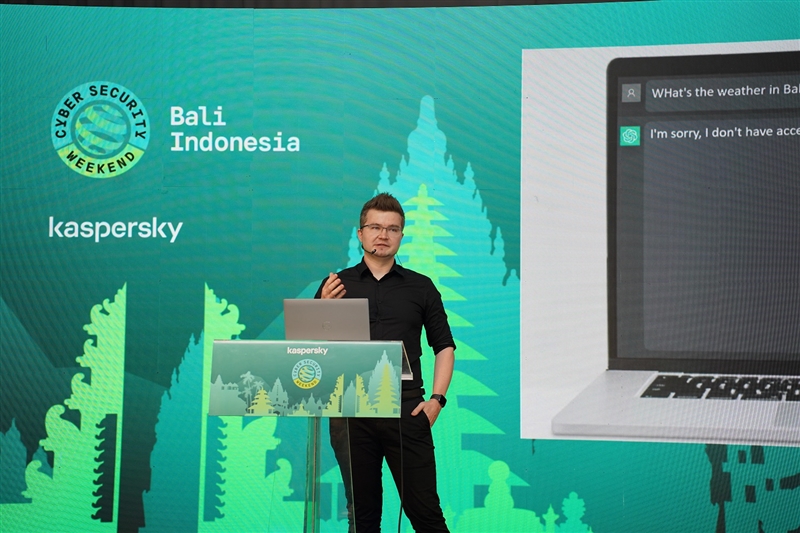

Artificial intelligence (AI) has reshaped much of our world, but that power carries responsibility. Vitaly Kamluk, Head of Research Center for Asia Pacific at Kaspersky, has outlined the psychological hazards he associates with the technology.

As cybercriminals increasingly use AI in their attacks, they can absolve themselves of guilt by placing the blame on the technology. Kamluk calls this phenomenon “suffering distancing syndrome.” Unlike criminals who commit physical crimes and witness their victims’ suffering, virtual thieves operating with AI can steal without ever seeing the consequences. This psychological disconnect from the harm they cause deepens when they can attribute their actions to the AI itself.

Another intriguing psychological by-product of AI is “responsibility delegation.” As automation and neural networks take over cybersecurity processes and tools, humans may begin to feel less accountable in the event of a cyberattack, particularly in a corporate context. In essence, an intelligent defense system could become the scapegoat, diminishing the vigilance of human operators.

Kamluk outlines several guidelines to safely navigate the AI landscape:

1. Accessibility and Accountability

To maintain control and accountability, it is essential to restrict anonymous access to intelligent systems built on vast datasets. It is equally important to document the history of generated content and to be able to determine how a given piece of AI-synthesized content was created. Just as the World Wide Web has mechanisms to address misuse, there should be procedures for handling AI misuse and clear avenues for reporting abuse, including AI-based support with human validation when necessary.
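One way to picture "documenting the history of generated content" is a small provenance record kept alongside each output. The sketch below is purely illustrative and is not something Kamluk or Kaspersky describes: the `ProvenanceRecord` type, its field names, and the `make_record` and `matches` helpers are all assumptions made for this example. It refuses anonymous requests, stores hashes of the prompt and the output, and can later check whether a piece of content corresponds to a logged generation.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical log entry describing one AI generation."""
    user_id: str         # identified requester (no anonymous access)
    model_name: str      # which system produced the content
    prompt_sha256: str   # hash of the request, not the raw text
    output_sha256: str   # hash of the generated content
    created_at: str      # UTC timestamp in ISO 8601 form
    ai_generated: bool = True  # explicit label for downstream users


def sha256_hex(text: str) -> str:
    """Return the SHA-256 digest of a string as hex."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def make_record(user_id: str, model_name: str,
                prompt: str, output: str) -> ProvenanceRecord:
    """Build a record; reject requests without a user identity."""
    if not user_id:
        raise ValueError("anonymous access is not allowed")
    return ProvenanceRecord(
        user_id=user_id,
        model_name=model_name,
        prompt_sha256=sha256_hex(prompt),
        output_sha256=sha256_hex(output),
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def matches(record: ProvenanceRecord, content: str) -> bool:
    """Check whether content is the output this record describes."""
    return record.output_sha256 == sha256_hex(content)


def to_json(record: ProvenanceRecord) -> str:
    """Serialize a record for an append-only audit log."""
    return json.dumps(asdict(record), sort_keys=True)
```

A record like this only shows that a given output was logged for a given user and model. It does not by itself prevent misuse, and the reporting and human-validation procedures mentioned above would still be needed around it.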

2. Regulations for AI Content

The European Union has already initiated discussions on marking AI-produced content. Such labeling would allow users to quickly identify AI-generated imagery, sound, video, or text. Kamluk also proposes licensing AI development activities, much as dual-use technologies and equipment are controlled, to ensure responsible use and manage potential harm.

3. Education and Awareness

Education is a powerful tool for addressing AI-related challenges. Schools should incorporate AI concepts into their curricula, highlighting the distinctions between artificial and natural intelligence and explaining both the reliability and the limitations of AI. Software developers need to be educated on responsible use of the technology and the consequences of misuse.

Kamluk concludes by acknowledging the dual nature of AI. While it has the potential to create content indistinguishable from human work, it can also be a source of destruction if not handled properly. As long as secure directives guide these smart machines, he argues, AI can be harnessed to our advantage.

Kaspersky’s commitment to exploring the future of cybersecurity continues at the Kaspersky Security Analyst Summit (SAS) 2023, scheduled for October 25th to 28th in Phuket, Thailand. This prestigious event will convene anti-malware experts, global law enforcement agencies, Computer Emergency Response Teams, and senior executives from various sectors, fostering a collective effort to address the evolving challenges in the digital landscape.